High availability of services in the cloud – how to do it?

In this cloud encyclopedia article, we’ll talk about high availability (HA) design. How do we achieve it, what do we assemble it from, and how robust should it be? What counts as high availability: 99.5%, 99.9% or 99.95%? And is it just about the high availability of cloud services, or do other considerations such as disaster recovery come into play? Let’s see what options we have in the cloud and what needs to be taken into account.

Lukáš Hudeček

High availability of services – general characteristics and what an SLA is

In general, high availability designs are based on business needs that most companies have defined in business continuity strategies, impact analyses and associated SLAs.

When planning for high availability, we must first define how high the availability should be. We have to start from what happens if part or all of the system doesn’t work. It is necessary to define all types of impact: operational, financial, impact on customer relationships, and the overall impact on the business.

From these definitions, the maximum permissible outage times are calculated. They form the basis for an architectural design that takes into account the required level of service availability, which is set out in a service-level agreement (SLA).

The SLA defines the parameters of the delivered service such as its description, operating time, reporting, security parameters, service performance measurement and, most importantly, service reliability and availability. In IT parlance, an “SLA of XX%” usually refers to the percentage of service availability.

To illustrate, an SLA of 99.9% allows a maximum downtime of:

- 1 min 26 s per day

- 10 min 4 s per week

- 43 min 49 s per month

- 2 h 11 min 29 s per quarter

- 8 h 45 min 56 s per year
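These figures follow from simple arithmetic: the permitted downtime is the unavailable fraction (100% minus the SLA) multiplied by the length of the period. Below is a minimal Python sketch. It assumes an average year of 365.2425 days (our assumption, chosen because it reproduces the values in the list) and rounds down to whole seconds; the function name `max_downtime` is illustrative only.

```python
from datetime import timedelta

# Average Gregorian year; an assumption that reproduces the figures in the list.
SECONDS_PER_YEAR = 365.2425 * 24 * 3600

PERIODS = {
    "day": 24 * 3600,
    "week": 7 * 24 * 3600,
    "month": SECONDS_PER_YEAR / 12,
    "quarter": SECONDS_PER_YEAR / 4,
    "year": SECONDS_PER_YEAR,
}


def max_downtime(sla_percent: float) -> dict[str, timedelta]:
    """Return the maximum downtime per period allowed by an availability SLA."""
    unavailable = 1 - sla_percent / 100
    return {
        # Truncate to whole seconds, as in the list above.
        name: timedelta(seconds=int(seconds * unavailable))
        for name, seconds in PERIODS.items()
    }


for period, budget in max_downtime(99.9).items():
    print(f"{period:8} {budget}")
```

With the same assumptions, `max_downtime(99.99)["year"]` comes out at 52 min 35 s.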

To achieve a high SLA, it is necessary to define a robust, resilient and reliable architectural design of the application or the entire environment so that all components used as a whole meet the required service level.

Generally, the whole system is only as robust as its weakest link, and that weakest link is most often a component on which someone tried to save money.
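The weakest-link idea can be put into numbers. If a request needs every component in a chain to be up, and if we assume the components fail independently (a simplification), the availabilities multiply, so the result is always lower than that of the weakest component. The component names and percentages in this sketch are hypothetical, not provider figures.

```python
from math import prod


def serial_availability(availabilities: list[float]) -> float:
    """Availability of a chain in which every component must be up.

    Assumes independent failures, which real systems only approximate.
    """
    return prod(availabilities)


# Hypothetical per-component availabilities for illustration.
chain = {"load balancer": 0.9999, "web tier": 0.9995, "database": 0.999}

composite = serial_availability(list(chain.values()))
print(f"weakest component: {min(chain.values()):.4%}")
print(f"whole chain:       {composite:.4%}")
```

In this example the chain ends up at about 99.84%, below the 99.9% of its weakest component.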

In addition, we need to design the architecture so that routine operational tasks do not jeopardize the SLA, such as:

- updates and upgrades,

- data recovery in case of damage or hacker attack,

- service and environment deployment (CI/CD).

Impacts of outages

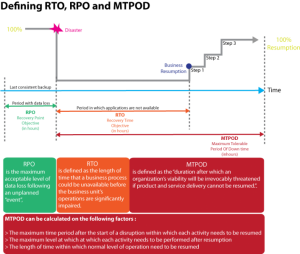

Achieving high availability (i.e., an SLA starting at “three nines”: 99.9%) is not cheap. The SLA value must therefore be based on a business impact analysis (BIA), which defines the impacts of an outage and the RPO/RTO/MTPOD times that follow from them:

- RPO (recovery point objective) – the maximum period for which data may be lost,

- RTO (recovery time objective) – the maximum time allowed for data recovery,

- MTPOD (maximum tolerable period of disruption) – the maximum recovery time of the entire system, including corrective steps after data recovery.

Quantification of the impact

After defining the RTO/RPO/MTPOD parameters, we need to quantify the impact, i.e. how much it will cost if the systems do not run for, say, 24, 48 or 72 hours. Only then is the CFO (or whoever holds the budget) willing to discuss how robust a high availability implementation to deploy or, conversely, what risks and potential financial losses must be accepted.
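A rough way to put a number on the table is a simple linear model: an hourly loss multiplied by the outage duration, plus any one-off costs. The sketch below is only that, a sketch; the amounts are hypothetical, and in practice the impact often grows faster than linearly as an outage drags on.

```python
def outage_cost(hours: float, loss_per_hour: float, one_off_costs: float = 0.0) -> float:
    """Estimated cost of an outage: linear hourly loss plus one-off costs."""
    return hours * loss_per_hour + one_off_costs


# Hypothetical inputs for illustration only.
LOSS_PER_HOUR = 5_000.0    # lost revenue and productivity per hour
ONE_OFF_COSTS = 20_000.0   # e.g. recovery work and customer compensation

for hours in (24, 48, 72):
    cost = outage_cost(hours, LOSS_PER_HOUR, ONE_OFF_COSTS)
    print(f"{hours} h outage: {cost:,.0f}")
```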

Penalties

The last important parameter is what happens in case of SLA violation by the service provider. The penalty is usually set according to the duration of the service outage or the unavailability of the entire service. In most cases, the penalty is calculated as a maximum of one monthly payment for the service.

Because the financial loss in the event of a failure is usually many times higher than the penalty paid by the supplier, it is essential to build a truly robust and resilient design.

Service architecture in the cloud

Cloud providers allow users of their services to achieve a wide range of availability levels. Most of the time, however, this is not achieved by a magical service configuration slider, but by proper redundant architecture using multiple balanced components and placing them in multiple availability zones.

Therefore, when designing a cloud architecture, we will focus on the platform services (PaaS) discussed in this article. PaaS offerings are robust by design: they consist of multiple components and have defined SLAs. In addition, they can be operated in multiple availability zones, are scalable and come with emergency scenarios, including backups and rapid re-deployment of the service.

When using classic infrastructure services such as VMs, it is a good idea to place them in a scale set. This ensures that additional instances are started automatically or on a schedule, and it also supports availability zones. In the same way, all storage has to be configured across availability zones, from the image being deployed to the managed disks under each VM.

- Microsoft Azure SLA Overview https://azure.microsoft.com/cs-cz/support/legal/sla/summary/

- AWS SLA Overview https://aws.amazon.com/compute/sla/

Highly available application architecture

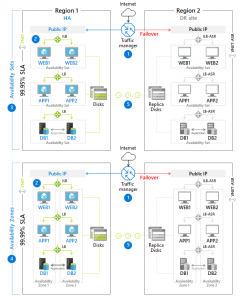

If I want to make applications truly robust and eliminate all possible risks (such as the loss of a datacenter), I need to distribute the components across regions and zones. I also need automated deployment and redeployment in case of loss or corruption. The figure shows an example multi-tier architecture with ASP.NET and SQL Server in two SLA variants:

- The first variant has an SLA of 99.95%: the components are distributed over two regions and the individual resources are placed in availability sets. All failover is handled by Azure Traffic Manager, described below.

- The second variant has an SLA of 99.99%: it differs from the first in that the resources in each of the two regions are additionally spread across two availability zones. This is about the highest SLA on offer, approaching 100%, with a maximum downtime of 52 min 35 s per year.

The increase in SLA to 99.99% was achieved by dividing the resources into availability zones, i.e., two separate physical locations within a region, whereas an availability set groups resources within a single physical location.

High service availability starts with regions and zones

Regions and zones are the basic building blocks of high availability of global service providers. For both key representatives of global service providers (Amazon Web Services and Microsoft Azure) this functionality is similar.

The region is a completely isolated environment that shares no infrastructure with any other region. Individual regions are connected only by backbone connectivity. Regions serve as global fault-domains (geographically separated and isolated datacenters, where the failure of one does not affect the other) and aim to completely separate the environment for business continuity & disaster recovery solutions.

It is usually not possible to natively connect two virtual servers from different regions over an internal network, but it is possible to replicate data between regions. Data connectivity between regions is usually charged for, but it is cheaper than internet connectivity.

By zone, we mean a specific data centre (or several nearby data centres in one location) within a region. Each zone is fully redundant and does not share any infrastructure elements (cooling equipment, diesel generators, network infrastructure, etc.) with another zone.

The zones are interconnected by a high-capacity network with minimal latency and connectivity within the zones is not charged. Virtual servers (or other services) can be located in different zones within a region and are connected by an internal network.

Overview of cloud components that are worth knowing

Redundancy of virtual instances

Azure VM Scale Sets and AWS EC2 Auto Scaling are auto-scaling services. They allow you to create and deploy hundreds of VMs in minutes from templates, with built-in load balancing and automatic scaling. Combining regular VMs and spot instances in one set can reduce costs considerably. By placing a VM scale set in at least two availability zones, an SLA of 99.99% can be achieved.

Container redundancy

Azure AKS and Amazon EKS are enterprise Kubernetes services that cover high availability, scalability, deployment, management and evaluation scenarios. They are well suited to modern applications built on a microservices architecture, which Kubernetes fully supports.

The solution also includes various balancing tools that can scale the number of running instances. I can add any type of VM from all available series to the AKS/EKS pool and it is possible to use spot instances that are optimal for processing batch jobs. For Build & Deploy I use the pipeline tools described below.

It’s good to know that deploying a Kubernetes cluster in the cloud doesn’t cost anything, only individual running VMs in pools are charged.

And what happens if your container “dies”? Kubernetes promptly starts a new, identical one and you don’t have to worry about anything. The same applies when the underlying VM crashes. You just need to distribute the underlying VMs and containers properly so that enough instances (1+n) remain available during an outage. This model is ideal for both backend and frontend services.

We discussed this topic in detail in our last article.

Application Redundancy

Azure App Service is a very interesting alternative to a Kubernetes cluster. It also allows you to run many instances of your code or of a Docker container. This web application scaling service has an SLA of 99.95% in the premium tier or the dedicated isolated tier.

The service also includes a load balancer for load distribution and supports private endpoints for connection to the internal network. For publishing content to the Internet, it is usually used in combination with Azure Application Gateway as an L4/L7 application firewall. Like other services, it fully supports automated deployment through CI/CD.

Azure Functions are similar to AWS Lambda and also allow you to run serverless code in container form. You pay for time and RAM used, and there are a relatively large number of free requests each month.

Azure Load Balancer and AWS Elastic Load Balancing are platform services that distribute incoming flows arriving at the front end across the instances in the back-end pool at Layer 4 (L4) of the Open Systems Interconnection (OSI) model.

In addition to traditional load balancers that operate at L4, cloud providers also offer more advanced application load balancers (in Azure, Application Gateway) that operate at the seventh layer (L7) of the OSI model and support additional features such as cookie-based session affinity.

Traditional load balancers at the fourth (transport) layer operate at the TCP/UDP level and route traffic from a source IP address and port to a destination IP address and port. In contrast, application load balancers can make decisions based on the content of a specific HTTP request, for example by routing according to the incoming URL.
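The difference between the two layers can be illustrated with a toy model. The L4 function below sees only addresses, ports and protocol and picks a backend by hashing them; the L7 function looks at the HTTP path. This is a simplified sketch, not how any particular cloud load balancer is implemented: the backend addresses, pool names, path rules and the hashing scheme are all hypothetical.

```python
import hashlib
from dataclasses import dataclass

BACKENDS = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]  # hypothetical back-end pool


@dataclass(frozen=True)
class Flow:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str  # "tcp" or "udp"


def l4_pick_backend(flow: Flow) -> str:
    """L4: choose a backend from the 5-tuple only; the payload is never inspected."""
    key = f"{flow.src_ip}:{flow.src_port}-{flow.dst_ip}:{flow.dst_port}-{flow.protocol}"
    digest = hashlib.sha256(key.encode()).digest()
    return BACKENDS[int.from_bytes(digest[:4], "big") % len(BACKENDS)]


# L7: hypothetical routing rules keyed on the URL path prefix.
L7_RULES = [("/api/", "api-pool"), ("/images/", "static-pool")]
DEFAULT_POOL = "web-pool"


def l7_pick_pool(http_path: str) -> str:
    """L7: choose a back-end pool based on the content of the HTTP request."""
    for prefix, pool in L7_RULES:
        if http_path.startswith(prefix):
            return pool
    return DEFAULT_POOL


flow = Flow("203.0.113.7", 50123, "198.51.100.10", 443, "tcp")
print(l4_pick_backend(flow))        # the same flow always maps to the same backend
print(l7_pick_pool("/api/orders"))  # api-pool
print(l7_pick_pool("/index.html"))  # web-pool
```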

Network redundancy

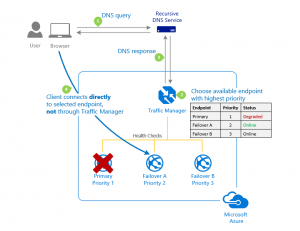

Traffic Manager is a DNS-based load balancer that allows you to distribute traffic for applications accessible from the Internet across regions. It also provides high availability and fast response for these public endpoints.

What does it all mean? In simple terms, it is a way to direct clients to the appropriate endpoints. Traffic Manager offers several routing methods to achieve this:

- priority routing

- weighted routing

- performance routing

- geographic routing

- multivalue routing

- subnet routing

These methods can be combined to build more flexible and sophisticated scenarios.

At the same time, the health of each region is automatically monitored and traffic is redirected if an outage occurs.
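To make two of the routing methods more concrete, here is a small sketch of the selection logic behind priority and weighted routing, combined with a health flag. It only models the decision; it is not the Traffic Manager API, and the real service answers DNS queries rather than calling functions. The `Endpoint` class, the region names and the weights are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Endpoint:
    name: str
    priority: int          # lower value = preferred endpoint
    weight: int            # relative share of traffic for weighted routing
    healthy: bool = True   # result of the latest health probe


def _healthy(endpoints: list[Endpoint]) -> list[Endpoint]:
    """Keep only endpoints that passed their health probe."""
    alive = [e for e in endpoints if e.healthy]
    if not alive:
        raise RuntimeError("no healthy endpoint available")
    return alive


def priority_route(endpoints: list[Endpoint]) -> Endpoint:
    """Always use the healthy endpoint with the lowest priority value."""
    return min(_healthy(endpoints), key=lambda e: e.priority)


def weighted_route(endpoints: list[Endpoint], rng: Optional[random.Random] = None) -> Endpoint:
    """Pick a healthy endpoint at random, in proportion to its weight."""
    rng = rng or random.Random()
    alive = _healthy(endpoints)
    return rng.choices(alive, weights=[e.weight for e in alive], k=1)[0]


endpoints = [
    Endpoint("west-europe", priority=1, weight=3),
    Endpoint("north-europe", priority=2, weight=1),
]
print(priority_route(endpoints).name)   # west-europe
endpoints[0].healthy = False            # simulate a regional outage
print(priority_route(endpoints).name)   # north-europe
```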

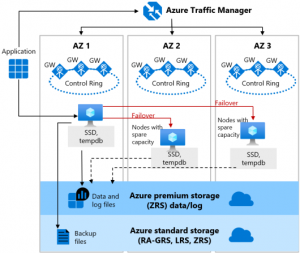

Database redundancy (RDS)

Azure PaaS database services and Amazon RDS (Relational Database Service) offer managed database engines, including:

- MySQL

- PostgreSQL

- MariaDB

- Oracle

- SQL Server

- Aurora DB (AWS)

- Cosmos DB (Azure)

- SQL Server Managed Instance (Azure)

- and others

For virtually all DB types, you can choose 1+n instances, select the type of HW on which the service will run, use Premium SSDs or Ultra SSDs. As with other services, it is possible to increase resilience by deploying across multiple availability zones, where we can get to an SLA of 99.99% in some scenarios. Another advantage of multiple replicas is the ability to use them to optimize read operations.

High availability and cloud storage

There are many types of storage in the cloud and we won’t go into detail about each one. Most of the storage services mentioned below have SLAs starting at 99.9%, rising to as much as 99.999% in high availability scenarios with data spread across regions and zones.

In Azure, these are Blob Storage, Azure Files, Azure Data Lake, and if high performance and low latency is needed, Azure NetApp Files.

In AWS, these services are Amazon S3, EFS (Elastic File System) and EBS (Elastic Block Store) as high-performance and redundant storage.

Even with the highest SLA storage, I have to take into account that I may lose data at some point. Although the probability is minimal (0.001%, i.e. “once every 10 years at most”), I still have to back everything up.

Content distribution

Azure CDN (Content Delivery Network) or Amazon CloudFront are services for global distribution of content such as web (including images), live stream, video or large files.

The service provides global balancing and caching, with a caching location (a so-called endpoint) in virtually every country. This platform service is used in the design of modern web applications to spread the load, so that repeated downloads of large amounts of static data do not overwhelm the web servers.

Components that no highly available modern application can do without

Highly available modern applications still use services like:

Message queuing is a service for queuing and exchanging messages, usually in XML or JSON format. The service is used, for example, to send and receive accounting documents between different systems.

In the cloud, we have the option to use Azure Service Bus, Amazon Simple Queue Service or the developer-favourite RabbitMQ, which is also available as a PaaS.
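The principle of exchanging JSON messages through a queue can be sketched with Python’s standard library. The in-process `queue.Queue` below merely stands in for a managed broker, which would run outside the application and survive its restarts; the message fields and function names are hypothetical.

```python
import json
import queue

# In-process stand-in for a managed queue service.
invoices: "queue.Queue[str]" = queue.Queue()


def send(q: "queue.Queue[str]", document: dict) -> None:
    """Producer: serialize the document to JSON and enqueue it."""
    q.put(json.dumps(document))


def receive(q: "queue.Queue[str]", timeout: float = 1.0) -> dict:
    """Consumer: take the oldest message off the queue and decode it."""
    return json.loads(q.get(timeout=timeout))


send(invoices, {"invoice_id": "INV-001", "amount": 1250.0, "currency": "EUR"})
print(receive(invoices)["invoice_id"])   # INV-001
```

Because the sender and receiver only share the queue, either side can be restarted or scaled out without the other noticing, which is what makes queues useful in highly available designs.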

Caches like Azure Redis Cache or Amazon ElastiCache are services that help optimize the retrieval of database content. This significantly speeds up the loading and running of web applications and also lightens the load on the database server.

Both services are available as PaaS and can be deployed across regions and availability zones.
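One common way to use such a cache is the cache-aside pattern: the application asks the cache first and goes to the database only on a miss. The sketch below uses a plain dictionary in place of a Redis-compatible service and a hypothetical `load_from_db` callback, so it shows the pattern rather than any vendor client library.

```python
import time
from typing import Any, Callable


class CacheAside:
    """Read-through helper: look in the cache first, fall back to the database."""

    def __init__(self, load_from_db: Callable[[str], Any], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._load = load_from_db
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]                    # cache hit: the database is not touched
        value = self._load(key)                # cache miss or expired entry
        self._entries[key] = (now + self._ttl, value)
        return value


db_calls: list[str] = []


def fake_db(key: str) -> str:
    """Hypothetical stand-in for a slow database query."""
    db_calls.append(key)
    return f"row-{key}"


cache = CacheAside(fake_db, ttl_seconds=60.0)
cache.get("42")
cache.get("42")
print(len(db_calls))   # 1: the second read was served from the cache
```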

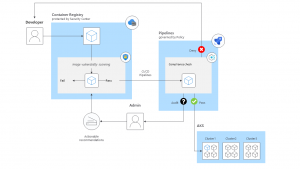

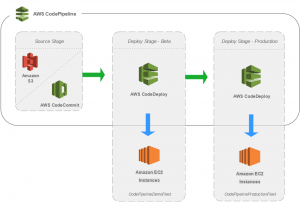

Automation of environment deployment (Build & Deploy)

When designing an HA architecture in the cloud, it is necessary to automate the deployment of the environment, both to reduce deployment time and to keep the code under version control. The ideal is to write everything as IaC (Infrastructure as Code) and manage the code in a CI/CD tool such as Azure DevOps, Azure Pipelines or AWS CodePipeline.

This means that I store the code in a repository (GitHub, Azure DevOps), where I version and approve changes. The build and deployment of the environment is done with a pipeline tool, first to a test/stage environment, where I verify functionality, deployment speed and other defined parameters. I then continue with deployment to the production environment. Everything is workflow-driven and the whole process is defined and transparently managed. In case of an error, these tools allow you to compare different versions of the code and highlight the changes.

For the record, some services like Azure App Service Plan allow you to deploy a newer version of the code alongside the old one, and then switch between them or roll back. It’s a very useful feature when I’m chasing every second of downtime at a high SLA.
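The idea of running two versions side by side and switching between them can be modelled in a few lines. This is a conceptual sketch only; the `Slots` class and its methods are hypothetical and do not correspond to any provider API.

```python
from dataclasses import dataclass


@dataclass
class Slots:
    production: str   # version currently serving users
    staging: str      # version deployed alongside, not yet live

    def deploy_to_staging(self, version: str) -> None:
        """Deploy a new version without touching production traffic."""
        self.staging = version

    def swap(self) -> None:
        """Exchange the two slots; calling it again rolls the change back."""
        self.production, self.staging = self.staging, self.production


app = Slots(production="v1", staging="v1")
app.deploy_to_staging("v2")
app.swap()               # v2 goes live, v1 stays ready in the other slot
print(app.production)    # v2
app.swap()               # roll back
print(app.production)    # v1
```

The old version is never torn down during the switch, which is why a rollback takes about as long as the switch itself.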

This all falls under a discipline called release management.

Chaos engineering testing

After building an architecture that we consider robust enough to meet the target SLA, it is a good idea to find the resources and time to implement chaos engineering testing. We have already written about this in a previous article.

By testing probable failures on individual components, we can determine the real behavior of the application during partial infrastructure and configuration failures. Only in this way can we be sure that the architecture meets real needs.
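The reasoning behind such tests can be shown on a model: fail one instance at a time and check whether the service as a whole would still be up. Real chaos engineering injects faults into a running environment; the sketch below works only on a hypothetical description of the topology and assumes a tier is available as long as at least one of its instances is healthy.

```python
def service_is_up(tiers: dict[str, dict[str, bool]]) -> bool:
    """The service works if every tier has at least one healthy instance."""
    return all(any(instances.values()) for instances in tiers.values())


def single_points_of_failure(tiers: dict[str, dict[str, bool]]) -> list[str]:
    """Fail each instance in turn and report the failures that take the service down."""
    spofs = []
    for tier, instances in tiers.items():
        for name in instances:
            # Inject one failure into a copy of the topology.
            experiment = {t: dict(i) for t, i in tiers.items()}
            experiment[tier][name] = False
            if not service_is_up(experiment):
                spofs.append(f"{tier}/{name}")
    return spofs


# Hypothetical topology: two zones for the application tiers, one database.
topology = {
    "frontend": {"web-zone1": True, "web-zone2": True},
    "backend": {"api-zone1": True, "api-zone2": True},
    "database": {"sql-primary": True},
}
print(single_points_of_failure(topology))   # ['database/sql-primary']
```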

Why a single VM is not enough even though a VM has a 99.9% SLA

One final example: why isn’t a single VM enough? Even though one VM has a relatively high SLA of 99.9%, I still have to count on updates to the operating system, database and application services. There may also be data corruption, or a failure of the disk, of the operating system (which has no fixed SLA), or of any other interconnected component.

If a scale set of at least two VMs is used, the SLA rises to 99.95% for at least one running instance. By placing the scale set in at least two availability zones, I achieve an SLA of 99.99% including the attached disks.
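Why redundancy helps can be seen from a simple probability model: if one copy is enough to keep the service running and the copies fail independently, the service is down only when all of them are down at once. Note that this is a theoretical calculation under an independence assumption; it gives higher numbers than the contractual SLAs quoted above, because providers commit to less and real failures are correlated.

```python
def redundant_availability(single: float, copies: int) -> float:
    """Availability of `copies` independent replicas where one survivor is enough."""
    return 1 - (1 - single) ** copies


for copies in (1, 2, 3):
    print(f"{copies} x 99.9% VM: {redundant_availability(0.999, copies):.7%}")
```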

So what do I have to deal with as an architect?

Here is the list:

- networks

- domain records

- firewalls

- routing

- application frontend

- application backend

- scale sets

- databases (RDS)

- load balancers

- CDN

- message queuing

- application cache

- automatic deployment

- testing

High availability of services in brief

High availability (HA) is natively supported in the cloud and can be deployed significantly faster than building environments across multiple datacenters in an on-premise environment. I can combine many high availability scenarios and service types in the cloud. Therefore, when designing a service architecture, it is essential to think not only about the high availability of a specific service, but always about the system as a whole, that is, the robustness and resilience of the entire solution.

You also need to take into account disciplines such as patch management of all components, and backup and recovery testing, i.e. have a disaster recovery solution whose recovery times also count towards the SLA.

And all this has to be covered by business continuity management, where I evaluate all possible risks and impacts, on the basis of which the architectural design is adjusted throughout the life cycle of the application and the environment.

The primary advantage of cloud solutions is a detailed SLA description of all services, logging and monitoring, architectural blueprints and best practice service architecture design that can be used as a basis for design.

It is also necessary to deal with the automation of service deployment and to describe everything as Infrastructure as Code. In contrast to an on-premise environment, where broken hardware has to be ordered, installed and connected, in the cloud it is enough to “re-deploy”.

If you are interested in the issue of high availability, other articles from the Cloud Encyclopedia are certainly worth your attention, where you will find some topics related to high availability treated in more detail.
