Cloud Management 2: Vulnerability Scanning – How to Prevent Security Threats in the Public Cloud

In the previous episode, we compared patch management in an on-premise environment with a cloud environment. In this article, we’ll take a look at security in the cloud, focusing on vulnerability scanning, patching and updating software and libraries, and continuously monitoring the health of our solution.

One of the main concerns companies have when moving to the public cloud is whether the move will reduce the overall security of their systems and sensitive data. I’ll try to avoid the false controversy about which environment is potentially more (in)secure, and instead focus on specific security threats in the public cloud and ways to manage them.

1) Misconfigured resources

Misconfigured cloud services can introduce many vulnerabilities. Typical examples are overly permissive access rules on cloud services and misconfigured firewalls.

Incorrect configuration of access rules in an S3 bucket

Quite regularly, there are media reports of sensitive data being leaked due to misconfigured access rules on an S3 bucket (the object store in AWS). Sometimes it is plain carelessness, where the S3 bucket is fully public, but most of the time the access rules are simply too broad (e.g. you just need to have any AWS account to access the S3 bucket).
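A minimal sketch of what such a check could look like. It assumes the bucket’s ACL and policy have already been fetched (for example with boto3’s `get_bucket_acl` and `get_bucket_policy`) and are passed in as plain dictionaries. The function names are hypothetical; the two group URIs are the ones AWS uses for “everyone” and “any authenticated AWS account”.

```python
ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"
AUTHENTICATED_USERS = "http://acs.amazonaws.com/groups/global/AuthenticatedUsers"


def risky_acl_grants(acl: dict) -> list[str]:
    """Return warnings for ACL grants that are open too widely."""
    warnings = []
    for grant in acl.get("Grants", []):
        uri = grant.get("Grantee", {}).get("URI")
        permission = grant.get("Permission", "?")
        if uri == ALL_USERS:
            warnings.append(f"{permission} granted to everyone on the Internet")
        elif uri == AUTHENTICATED_USERS:
            warnings.append(f"{permission} granted to any AWS account")
    return warnings


def policy_allows_anyone(policy: dict) -> bool:
    """True if an Allow statement has a wildcard principal and no Condition."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    for stmt in statements:
        principal = stmt.get("Principal")
        aws = principal.get("AWS") if isinstance(principal, dict) else principal
        wildcard = aws == "*" or (isinstance(aws, list) and "*" in aws)
        if stmt.get("Effect") == "Allow" and wildcard and "Condition" not in stmt:
            return True
    return False


# Example input shaped like an ACL response (values are made up).
example_acl = {
    "Grants": [
        {"Grantee": {"Type": "Group", "URI": AUTHENTICATED_USERS}, "Permission": "READ"}
    ]
}
print(risky_acl_grants(example_acl))
```

This only covers the two most common mistakes. A statement with a wildcard principal and a `Condition` can still be too permissive, so treat the result as a first filter, not a verdict.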

Examples of known incidents:

- Capital One (July 2019): An attacker stole the personal and financial information of more than 100 million customers. A misconfigured web application firewall allowed the attacker to obtain credentials that gave access to sensitive data stored in the company’s S3 buckets.

- Uber (2016): Attackers used AWS access keys found in a private code repository to reach data stored in Amazon S3. The breach affected 57 million customers and drivers and led to the disclosure of personal data.

- Verizon (July 2017): A misconfigured S3 bucket operated by a third party caused the personal data of 6 million customers to be leaked. The incident involved the exposure of data such as customer names, addresses, and identification numbers.

- Dow Jones (July 2017): Due to a misconfigured S3 bucket, the company accidentally exposed the personal information of more than 2.2 million of its customers.

- Accenture (September 2017): The company accidentally left four S3 buckets publicly accessible, resulting in the exposure of sensitive data, including company passwords and system access credentials.

You can see other well-known cases in these articles.

Misconfiguration of firewalls

Although cloud environments allow network microsegmentation using AWS Security Groups or Azure Network Security Groups, firewalls are still often left open to the whole Internet, either completely or “only” on sensitive ports such as 22 (SSH) or 3389 (RDP).

(Comrades from not-so-friendly countries are just waiting to connect to your server. As an experiment, create a publicly accessible server, let it run for one hour, and check the log for the number of failed connection attempts. My guess is that you’ll see thousands of them, because bots constantly scan the Internet for any reachable system to break into.)

As a general rule, no system should be publicly accessible (i.e. have a public IP address) unless absolutely necessary. And if it is necessary, it should only be made available to known IP addresses.
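A rule like this can be checked automatically. The sketch below assumes a security group dictionary shaped like the entries returned by the EC2 `describe_security_groups` call. The function names and the sample group are hypothetical.

```python
import ipaddress

SENSITIVE_PORTS = {22: "SSH", 3389: "RDP"}


def open_to_world(cidr: str) -> bool:
    """True for 0.0.0.0/0 and ::/0, i.e. ranges that match every address."""
    return ipaddress.ip_network(cidr, strict=False).prefixlen == 0


def find_exposed_ports(security_group: dict) -> list[str]:
    """List inbound rules that expose sensitive ports to the whole Internet."""
    findings = []
    for rule in security_group.get("IpPermissions", []):
        cidrs = [r["CidrIp"] for r in rule.get("IpRanges", [])]
        cidrs += [r["CidrIpv6"] for r in rule.get("Ipv6Ranges", [])]
        if not any(open_to_world(c) for c in cidrs):
            continue
        protocol = rule.get("IpProtocol")
        if protocol == "-1":  # "-1" means every protocol and port
            findings.append("all ports and protocols open to the Internet")
            continue
        if protocol not in ("tcp", "udp"):
            continue
        low, high = rule.get("FromPort", 0), rule.get("ToPort", 65535)
        for port, name in SENSITIVE_PORTS.items():
            if low <= port <= high:
                findings.append(f"port {port} ({name}) open to the Internet")
    return findings


# Hypothetical group that leaves SSH open to everyone.
example_group = {
    "GroupId": "sg-example",
    "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
         "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
    ],
}
print(find_exposed_ports(example_group))
```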

Cloud platforms offer us (often for free) tools and recommendations on how to secure our systems in the cloud. But it is up to us to know about them and to implement them.

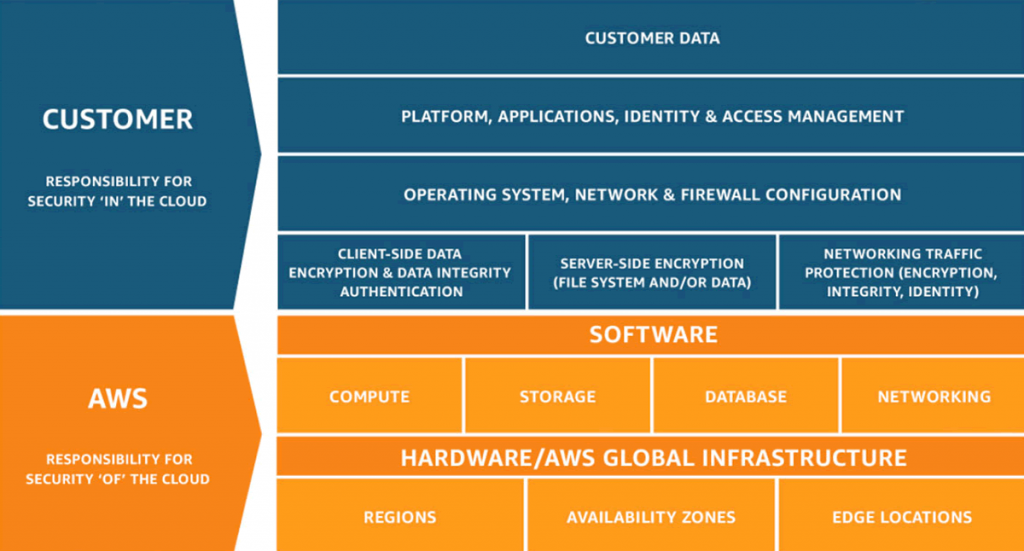

All major clouds define some form of shared responsibility model in which they say they take responsibility for the security of the cloud itself. However, the responsibility for the configuration of the cloud and the applications that run on it lies with the customer (see 8 principles to ensure cloud security).

We discuss how to verify your cloud settings later in Cloud Configuration Scanning.

2) Bad authentication and authorization settings

Once an attacker gains access to a user’s account, they can also gain access to sensitive data and resources. Weak passwords, insufficient authentication and authorization, and other factors are usually to blame.

Multi-factor authentication (MFA) should be required as an absolute baseline when logging into the cloud. So, in addition to your name and password (or access keys), you will need a one-time password (OTP), which is generated by an app on your mobile phone or sent to you via email (or SMS).
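To show what the app on your phone actually does, here is a minimal sketch of a time-based one-time password, assuming the app follows RFC 6238 (TOTP) with HMAC-SHA1, which is what common authenticator apps use. The secret below is the published RFC test key, not something you would use in practice; real services hand out a random, base32-encoded secret.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time password (RFC 4226)."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits pick the offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time: float, step: int = 30, digits: int = 6) -> str:
    """Time-based variant: the counter is the number of elapsed time steps."""
    return hotp(secret, int(at_time) // step, digits)


RFC_TEST_SECRET = b"12345678901234567890"
print(totp(RFC_TEST_SECRET, at_time=59))
```

Both sides share the secret and the clock, so the server can compute the same code and compare it with what the user typed. That is also why an OTP sent by email or SMS is the weaker option: it travels over a channel an attacker may be able to read.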

You can also only allow access to the cloud from certain IP addresses.
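In the cloud this restriction is normally expressed in policy (for example with a condition on the caller’s source IP), but the underlying check is simple. A minimal sketch, assuming a hypothetical allowlist built from documentation-only address ranges:

```python
import ipaddress

# Hypothetical allowlist: an office network and a single VPN exit address.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.17/32"),
]


def is_allowed(source_ip: str) -> bool:
    """True if the caller's address falls inside any allowed network."""
    address = ipaddress.ip_address(source_ip)
    return any(address in network for network in ALLOWED_NETWORKS)


print(is_allowed("203.0.113.42"))
print(is_allowed("192.0.2.1"))
```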

Cloud platforms provide tools to monitor and detect suspicious activity, including login attempts from unusual geographic locations or at unusual times of day. These tools allow you to monitor events and alert you to suspicious activity.

3) Bugs in software and libraries

Attackers can also exploit known vulnerabilities in outdated software and libraries. These can be caused by poor code implementation, vulnerabilities in software libraries or misconfiguration.

We cover ways to detect known vulnerabilities in software in a separate section, Scanning for vulnerabilities in applications.

4) Insufficient data security

Inadequate data security can lead to the theft of sensitive information. Poor encryption, inadequate data storage or inadequate access controls are often to blame.

In theory, I should convince you that the only sound concept to ensure better security of stored data is to minimize user access rights. Personally, however, I prefer a different approach: giving users the maximum possible rights to use the cloud meaningfully and independently, but with the assumption that all users are properly trained and aware of the risks associated with the cloud environment.

This can be elegantly addressed by creating multiple cloud environments for individual applications/teams. Everyone then “plays on their own turf” and if they “break something” it doesn’t affect others.

If some sensitive data should not be accessed even by the cloud administrator, we need to encrypt the data on the client side (that is, in the application) and store the encryption keys outside the cloud itself (e.g. external HSM).

5) DDoS attacks

Distributed Denial of Service (DDoS) attacks are common in the public cloud. Attackers use many devices to send a large number of seemingly legitimate requests to a target server, which can make the application unavailable and cause the service to fail.

Still, using the cloud to minimize the impact of a DDoS attack is the way to go for several reasons:

- Cloud platforms have a “wealth” of experience with DDoS and offer services to help with protection (AWS Shield, Azure DDoS Protection, Google Cloud Armor).

- Cloud platforms have massive connectivity to the internet that cannot be as easily overloaded as an internet connection to an on-premise datacenter.

- Application autoscaling can be set up in such a way that it can absorb the increased number of requests until the attack stops.

6) Poor API management

APIs (Application Programming Interfaces) are an increasingly important part of modern applications and systems, so it is important to ensure their security.

Various security issues can arise when managing APIs – for example, improper authentication and authorization can allow an attacker to access API functions to which they are not authorized. Unauthorized access can also occur if an attacker obtains access credentials from an authorized user or finds a vulnerability in the API that can be exploited.

To create APIs in the cloud, we should use dedicated services (AWS API Gateway, Azure API Management, GCP API Gateway) and integrate them with other services for strong authentication and authorization (AWS Cognito, Azure AD). We should also set proper rate limits (a maximum number of requests in a given period, so that users cannot bombard our APIs), and we can add a Web Application Firewall to protect against application-layer attacks.
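The managed API services handle rate limiting for you, but it helps to know what is happening underneath. Below is a minimal sketch of a token bucket, one common way to implement a rate limit. It is an illustration and not how any particular gateway is implemented; the class and parameter names are hypothetical.

```python
import time


class TokenBucket:
    """Allow `rate_per_second` requests on average, with bursts up to `capacity`."""

    def __init__(self, rate_per_second: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = capacity
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        """Consume one token if available; otherwise reject the request."""
        now = self.clock()
        # Refill according to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# One bucket per API key or client: 5 requests per second, bursts of 10.
bucket = TokenBucket(rate_per_second=5, capacity=10)
accepted = sum(bucket.allow() for _ in range(20))
print(f"{accepted} of 20 back-to-back requests accepted")
```

A rejected request would typically be answered with HTTP 429 so that well-behaved clients know to back off.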

Vulnerability scanning

Vulnerabilities in the cloud can be divided into two main categories: cloud configuration issues and vulnerabilities in the software we run in the cloud. It is important to stress that proper cloud protection requires a combination of preventive measures in both areas.

Cloud Configuration Scanning

Public clouds have mechanisms (AWS Service Control Policies, Azure Policy) that can be used to completely disable certain activities. For example, they will not allow a user to create a subnet accessible from the Internet, so they will not be able to create a server that is accessible from the Internet.
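As an illustration, here is a sketch of an AWS Service Control Policy that blocks the creation and attachment of internet gateways, written as a Python dictionary and printed as the JSON you would attach to an organizational unit. The `Sid` is a hypothetical name, and this is deliberately not a complete lockdown: it does not cover other routes to the Internet, such as VPC peering.

```python
import json

# Sketch of an SCP; the Sid is an arbitrary label chosen for this example.
deny_internet_gateways = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyNewInternetGateways",
            "Effect": "Deny",
            "Action": [
                "ec2:CreateInternetGateway",
                "ec2:AttachInternetGateway",
                "ec2:CreateEgressOnlyInternetGateway",
            ],
            "Resource": "*",
        }
    ],
}

policy_json = json.dumps(deny_internet_gateways, indent=2)
print(policy_json)
```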

There are even pre-made sets of policies that help you comply with, for example, ISO standards or security benchmarks.

However, there may be cases where we don’t want to (or can’t) explicitly disable something, but still need the configuration to meet certain requirements, such as the PCI DSS standard. In AWS, we use the AWS Config service to track the configuration of our AWS environment and how it changes.

AWS Config identifies configuration changes that may indicate a security risk or policy violation, so we can quickly spot and respond to issues. It can also create alerts or trigger actions that fix the issue automatically.

In addition, AWS Config can also help with auditing and change history by storing the configuration history of your environment. This makes it possible to view configuration changes retroactively and check who made the changes and when (which can be useful for auditing).
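The core idea behind a configuration history is comparing snapshots. The sketch below is not the AWS Config API; it is a hypothetical helper that compares two configuration snapshots held as nested dictionaries and reports what changed.

```python
def diff_config(before: dict, after: dict, prefix: str = "") -> list[str]:
    """Describe the differences between two nested configuration snapshots."""
    changes = []
    for key in sorted(set(before) | set(after)):
        path = f"{prefix}{key}"
        if key not in before:
            changes.append(f"added {path} = {after[key]!r}")
        elif key not in after:
            changes.append(f"removed {path}")
        elif isinstance(before[key], dict) and isinstance(after[key], dict):
            # Recurse so that only the changed leaf is reported.
            changes.extend(diff_config(before[key], after[key], prefix=f"{path}."))
        elif before[key] != after[key]:
            changes.append(f"changed {path}: {before[key]!r} -> {after[key]!r}")
    return changes


# Made-up snapshots of a storage bucket's settings.
yesterday = {"encryption": {"enabled": True}, "public": False}
today = {"encryption": {"enabled": False}, "public": False, "tags": {"env": "prod"}}

for line in diff_config(yesterday, today):
    print(line)
```

In a real setup each snapshot would also carry who made the change and when, which is exactly the information an auditor asks for.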

When integrated with other AWS services, AWS Config will greatly improve the monitoring of your AWS environment and identify potential security risks and configuration issues.

In the Azure world, the Change analysis tool works analogously, looking for configuration changes to supported resources.

CVE (Common Vulnerabilities and Exposures)

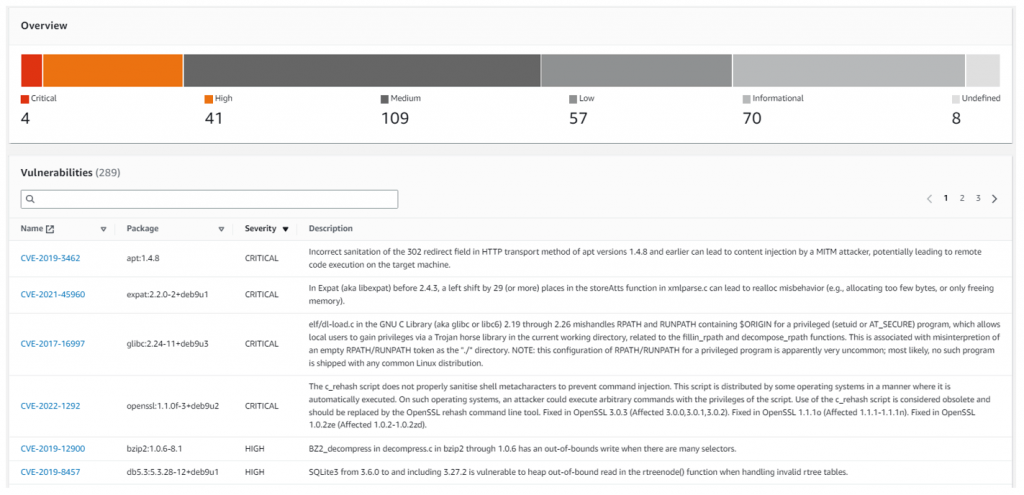

CVE is a program for identifying, describing and recording publicly known cybersecurity vulnerabilities. Each discovered vulnerability gets a unique identifier and is stored in the CVE database (at the time of writing, it had 203,653 entries). Its severity (critical, high, medium, low, none) is usually expressed through a CVSS score.
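The severity labels usually come from the CVSS score attached to a CVE entry. A minimal sketch of the mapping, assuming the qualitative rating scale from CVSS v3.x (the function name is hypothetical):

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "none"
    if score < 4.0:
        return "low"
    if score < 7.0:
        return "medium"
    if score < 9.0:
        return "high"
    return "critical"


for score in (0.0, 3.1, 5.4, 7.5, 9.8):
    print(score, cvss_severity(score))
```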

The CVE database was created to standardize vulnerability reporting and provide users with an easy way to identify potential security threats and minimize risks.

In recent years, alternatives to the CVE database have emerged that attempt to address some of its shortcomings (e.g., the problem of speed of vulnerability disclosure). However, the CVE database still remains a key tool for security experts and organizations worldwide.

Scanning for vulnerabilities in applications

There are a number of tools and solutions for vulnerability scanning. It’s just a matter of picking one and starting to use it (Vulnerability Scanning Tools, Orca Cloud Security, Amazon Inspector, Azure Defender for Cloud and more).

Normally, security scanning is done at the beginning when writing application code. Then eventually the container is scanned when it is uploaded to the repository, but that’s about it. Who would bother with regular security scanning? After all, we have a perimeter firewall, so no one can get to us (plus we’re already busy).

Here I would like to point out that hackers are making billions of dollars worldwide and are therefore highly motivated to improve. We, on the other hand, should be equally motivated to use all available means to reduce the attack surface.

It is not enough to update the OS every 3 months because a standard requires it. We should continuously know what state our system is in with respect to security, the platforms used and our own applications.

Cloud environments can easily be set up to perform security scanning at regular intervals (1x daily, 1x weekly). If a new vulnerability is discovered that meets our defined level (e.g. high/critical), we get an automatic notification via email, Slack, or otherwise.
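The filtering step is simple enough to sketch. The example assumes scan results arrive as a list of dictionaries with `id`, `severity` and `package` keys. That shape is hypothetical, since every scanner has its own output format, and the second finding is a made-up placeholder.

```python
SEVERITY_ORDER = ["none", "low", "medium", "high", "critical"]


def findings_to_report(findings: list[dict], threshold: str = "high") -> list[dict]:
    """Keep only findings at or above the configured severity threshold."""
    minimum = SEVERITY_ORDER.index(threshold)
    return [f for f in findings if SEVERITY_ORDER.index(f["severity"]) >= minimum]


def format_notification(findings: list[dict]) -> str:
    """Build a short message suitable for email or a chat channel."""
    lines = [f"{len(findings)} vulnerabilities need attention:"]
    lines += [f"- {f['id']} ({f['severity']}) in {f['package']}" for f in findings]
    return "\n".join(lines)


scan_results = [
    {"id": "CVE-2021-44228", "severity": "critical", "package": "log4j-core"},
    {"id": "EXAMPLE-0001", "severity": "low", "package": "some-library"},
]

urgent = findings_to_report(scan_results, threshold="high")
if urgent:
    print(format_notification(urgent))
```

Sending the message is left out on purpose: whether it goes to email, Slack or a ticketing system depends on what the team already watches.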

We need to remove a serious vulnerability as quickly, safely and easily as possible, which usually means updating the OS, platform or application and redeploying the application.

CI/CD + Infra as Code

If we have a properly configured CI/CD pipeline and are able to deploy new versions without system downtime, patching the application is not a challenge.

If it is:

- a vulnerability in the cloud configuration, we’ll modify the IaC scripts,

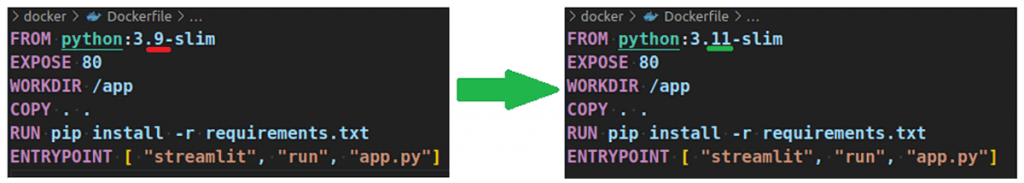

- a vulnerability in the operating system, we just update the VM or Docker base image in the IaC scripts to an OS version where the vulnerability is resolved,

- a vulnerability in the application libraries, you need to update the libraries to new versions and rebuild the application,

- a vulnerability in the application code itself, you need to make adequate code changes and rebuild the entire application.

After each such intervention, we need to be sure that our application is still working and that we haven’t introduced any new bugs. Automated tests covering the “critical” functionality of the application will help us with this. They should be part of every CI/CD pipeline, and the tests should always run before deployment to production.

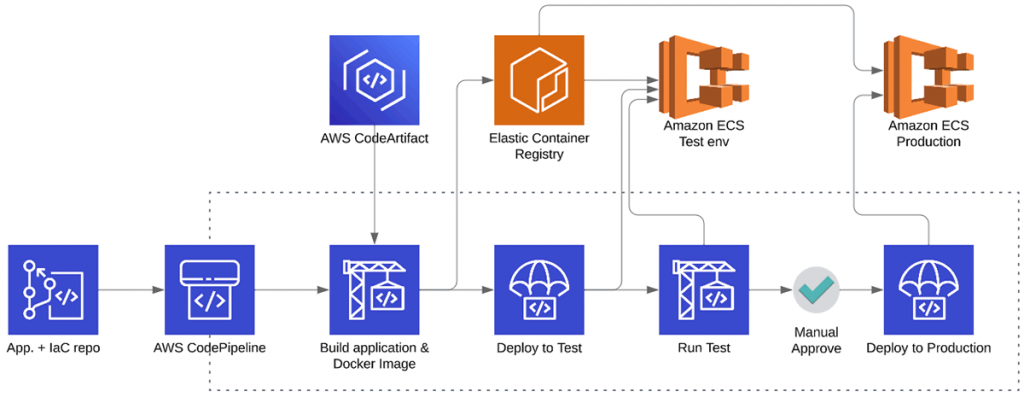

The diagram shows the minimum number of steps a CI/CD pipeline should take to successfully deploy a new version of an application. Manual approval prior to deployment to production is optional, but I have yet to personally experience a project where deployment is completely automated.
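The logic of such a pipeline can be sketched in a few lines: run the stages in order and stop at the first failure, so that nothing reaches production unless everything before it passed. The stage names below are assumptions for illustration, not a transcription of the diagram, and the lambdas stand in for real build and test commands.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order, stop at the first failure, and return a log."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            log.append("pipeline stopped, nothing deployed to production")
            break
    return log


deployed = []

pipeline: list[Stage] = [
    ("build", lambda: True),
    ("vulnerability scan", lambda: True),
    ("automated tests", lambda: False),  # simulate a failing test suite
    ("manual approval", lambda: True),
    ("deploy to production", lambda: deployed.append("v2") is None),
]

for line in run_pipeline(pipeline):
    print(line)
```

Because the failing test stage stops the run, the deployment step is never called and `deployed` stays empty.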

Conclusion to vulnerability scanning

We can basically repeat the above process over and over again as new vulnerabilities appear in our solution. So sooner or later, the effort invested in the CI/CD pipeline will pay off.

Without automation, new security processes like these would generate significantly more work for administrators. In our case, however, it’s just a matter of updating a few scripts and committing to Git; the CI/CD pipeline does the rest for us. The important thing is to know when to do these updates.

Vulnerability scanning is a necessary step to ensure the security of your systems and data in the public cloud. This process allows you to identify potential vulnerabilities in your computer systems and allows you to take action to address them.